Function approximation with RBFN

Neural Networks course (practical examples) © 2012 Primoz Potocnik

PROBLEM DESCRIPTION: Create a function approximation model based on a measured data set. Apply various neural network architectures based on radial basis functions (RBF), and compare them with a multilayer perceptron (MLP) and a linear regression model.

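Contents

- Linear Regression
- Exact RBFN
- RBFN
- GRNN
- RBFN trained by Bayesian regularization
- MLP
- Data generator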

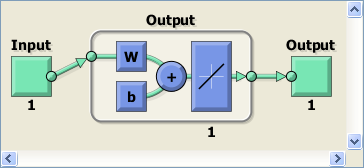

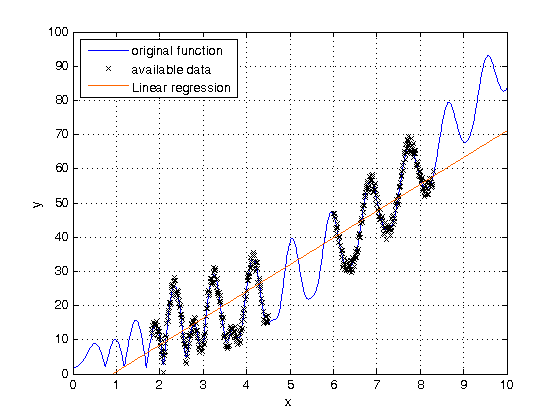

Linear Regression

```matlab
close all, clear all, clc, format compact

% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%---------------------------------
% no hidden layers
net = feedforwardnet([]);

% % one hidden layer with linear transfer functions
% net = feedforwardnet([10]);
% net.layers{1}.transferFcn = 'purelin';

% data division: use all samples for training
% (no validation set, hence no early stopping)
net.divideParam.trainRatio = 1.0;   % training set [fraction]
net.divideParam.valRatio   = 0.0;   % validation set [fraction]
net.divideParam.testRatio  = 0.0;   % test set [fraction]

% train a neural network
net.trainParam.epochs = 200;
net = train(net,Xtrain,Ytrain);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'color',[1 .4 0])
legend('original function','available data','Linear regression','location','northwest')
```
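With no hidden layers, feedforwardnet([]) reduces to a single linear neuron y = w*x + b, so after training it should land close to the ordinary least-squares line. As a cross-check, the minimal base-MATLAB sketch below computes that line directly with the backslash operator. It rebuilds the training data inline (same formula and index ranges as data_generator, without plotting) so it runs on its own; the names A, p, w, b and Ylin are illustrative only.

```matlab
% Rebuild the training data exactly as data_generator does (no plotting)
X = 0.01:.01:10;
f = abs(besselj(2,X*7).*asind(X/2) + (X.^1.95)) + 2;
idx = [181:450 601:830];
Xtrain = X(idx);
Ytrain = f(idx) + 5*(rand(1,numel(idx)) - .5);

% Ordinary least squares for y = w*x + b: solve A*[w; b] = Ytrain'
A = [Xtrain' ones(numel(Xtrain),1)];
p = A \ Ytrain';
w = p(1);
b = p(2);

% Evaluate the fitted line over the complete input range
Ylin = w*X + b;
fprintf('w = %.3f, b = %.3f\n', w, b);
```

Because the model is linear in x, no amount of training lets it follow the oscillations of f; any remaining gap between this line and the trained network's line should come from the iterative optimizer, not from the model class.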

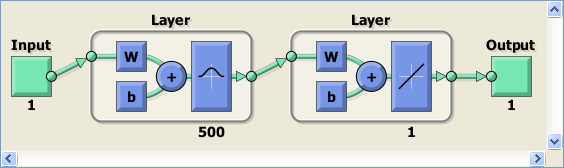

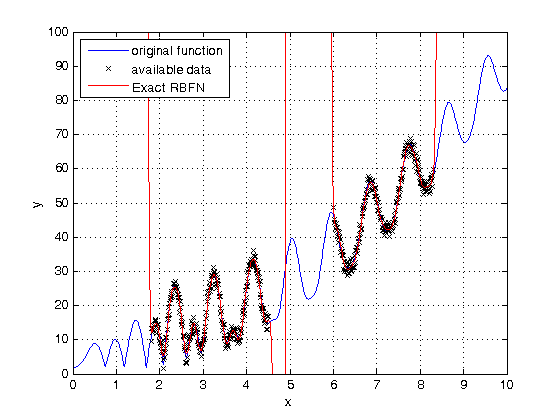

Exact RBFN

```matlab
% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%---------------------------------
% choose a spread constant
spread = .4;

% create a neural network
net = newrbe(Xtrain,Ytrain,spread);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'r')
legend('original function','available data','Exact RBFN','location','northwest')
```

Warning: Rank deficient, rank = 53, tol = 1.110223e-13.
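The rank-deficiency warning comes from the linear solve inside newrbe. According to the newrbe documentation, it places one radbas neuron on every training input (first-layer weights equal to the inputs, biases 0.8326/spread, so each basis function drops to 0.5 at distance spread) and then solves [W2 b2] * [A1; ones] = T for the output layer. The base-MATLAB sketch below rebuilds that system; the names b1, D, A, H and Wb are illustrative. With 500 inputs only 0.01 apart and spread = 0.4, neighbouring columns of A are almost identical, so H has far fewer numerically independent rows than its 501 rows.

```matlab
% Training data rebuilt as in data_generator (no plotting)
X = 0.01:.01:10;
f = abs(besselj(2,X*7).*asind(X/2) + (X.^1.95)) + 2;
idx = [181:450 601:830];
Xtrain = X(idx);
Ytrain = f(idx) + 5*(rand(1,numel(idx)) - .5);
spread = .4;

% Hidden layer: one radbas neuron centered on every training input.
% bias = sqrt(log(2))/spread (about 0.8326/spread): output 0.5 at distance spread
b1 = sqrt(log(2))/spread;
D = abs(Xtrain' - Xtrain);             % neuron-by-sample distances (implicit expansion)
A = exp(-(b1*D).^2);                   % radbas(n) = exp(-n.^2), 500 x 500

% Output layer: solve [W2 b2] * [A; ones] = Ytrain in the least-squares sense
H = [A; ones(1, numel(Xtrain))];       % 501 x 500
fprintf('numerical rank of [A; ones]: %d of %d rows\n', rank(H), size(H,1));
Wb = Ytrain / H;                       % MATLAB warns here when H is rank deficient
Yfit = Wb * H;                         % network output at the training inputs
```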

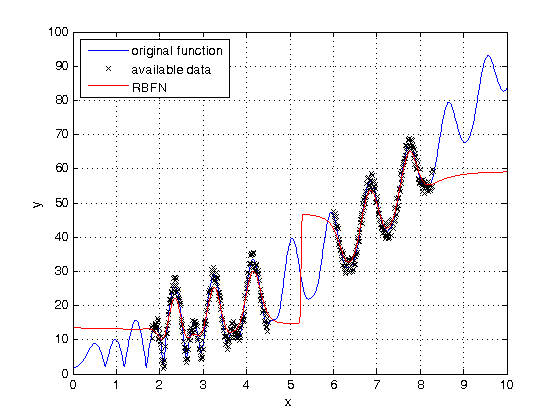

RBFN

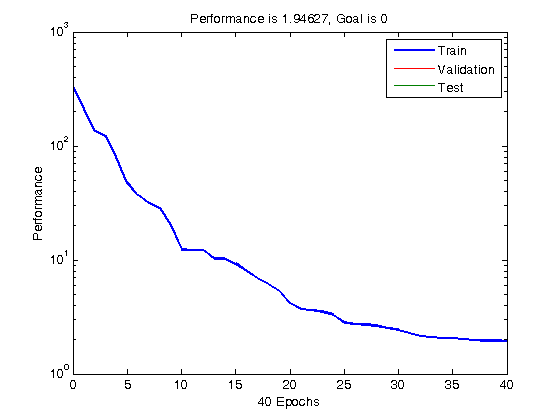

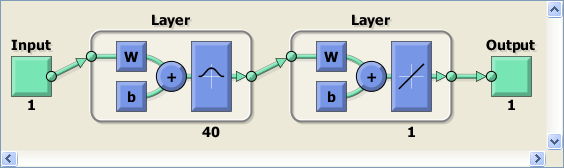

```matlab
% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%---------------------------------
% choose a spread constant
spread = .2;
% choose max number of neurons
K = 40;
% performance goal (MSE)
goal = 0;
% number of neurons to add between displays
Ki = 5;
% create a neural network
net = newrb(Xtrain,Ytrain,goal,spread,K,Ki);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'r')
legend('original function','available data','RBFN','location','northwest')
```

```
NEWRB, neurons = 0, MSE = 333.938
NEWRB, neurons = 5, MSE = 47.271
NEWRB, neurons = 10, MSE = 12.3371
NEWRB, neurons = 15, MSE = 9.26908
NEWRB, neurons = 20, MSE = 4.16992
NEWRB, neurons = 25, MSE = 2.82444
NEWRB, neurons = 30, MSE = 2.43353
NEWRB, neurons = 35, MSE = 2.06149
NEWRB, neurons = 40, MSE = 1.94627
```
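The log above shows newrb growing the hidden layer step by step. The newrb documentation describes the loop as: simulate the network, find the input vector with the greatest error, add a radbas neuron centered there, and redesign the linear output layer. The sketch below follows those documented steps in base MATLAB; the toolbox implementation may choose centers with a more refined criterion, and starting from a bias-only model is an assumption of this sketch. The names centers, used, Yhat and Wb are illustrative.

```matlab
% Training data rebuilt as in data_generator (no plotting)
X = 0.01:.01:10;
f = abs(besselj(2,X*7).*asind(X/2) + (X.^1.95)) + 2;
idx = [181:450 601:830];
Xtrain = X(idx);
Ytrain = f(idx) + 5*(rand(1,numel(idx)) - .5);

spread = .2;  K = 40;  Ki = 5;
b1 = sqrt(log(2))/spread;              % same radbas bias convention as newrbe
centers = zeros(1, K);
used = false(size(Xtrain));            % inputs already used as centers
Yhat = mean(Ytrain) * ones(size(Ytrain));   % start from a bias-only model
for k = 1:K
    % find the (not yet used) training input with the greatest error
    err = abs(Ytrain - Yhat);
    err(used) = -Inf;
    [~, worst] = max(err);
    used(worst) = true;
    centers(k) = Xtrain(worst);
    % hidden-layer outputs: one row per center, one column per sample
    A = exp(-(b1*abs(centers(1:k)' - Xtrain)).^2);
    % redesign the linear output layer [W2 b2] by least squares
    H = [A; ones(1, numel(Xtrain))];
    Wb = Ytrain / H;
    Yhat = Wb * H;
    if mod(k, Ki) == 0
        fprintf('neurons = %d, MSE = %g\n', k, mean((Ytrain - Yhat).^2));
    end
end
```

Each added neuron can only lower (or keep) the least-squares training error, which is the monotone trend visible in the NEWRB log; the exact MSE values depend on the noise draw.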

GRNN

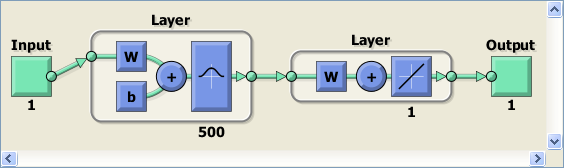

```matlab
% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%---------------------------------
% choose a spread constant
spread = .12;

% create a neural network
net = newgrnn(Xtrain,Ytrain,spread);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'r')
legend('original function','available data','GRNN','location','northwest')
```
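Per the newgrnn documentation, the first layer is again one radbas neuron per training input (bias 0.8326/spread), and the second layer uses the normprod weight function with the targets as weights. The output is therefore a kernel-weighted average of the training targets, i.e. Nadaraya-Watson kernel regression. A base-MATLAB sketch of that computation, with illustrative names b1, Wk and Ygrnn:

```matlab
% Training data rebuilt as in data_generator (no plotting)
X = 0.01:.01:10;
f = abs(besselj(2,X*7).*asind(X/2) + (X.^1.95)) + 2;
idx = [181:450 601:830];
Xtrain = X(idx);
Ytrain = f(idx) + 5*(rand(1,numel(idx)) - .5);

spread = .12;
b1 = sqrt(log(2))/spread;              % same radbas bias convention as newrbe
% kernel weights: row i = query point X(i), column j = training input j
Wk = exp(-(b1*abs(X' - Xtrain)).^2);   % 1000 x 500
% normalized weighted average of the targets (normprod-style second layer)
Ygrnn = ((Wk * Ytrain') ./ sum(Wk, 2))';
```

Since every output is a convex combination of training targets, a GRNN never predicts outside the range of the targets; far from the data it levels off at the values of the nearest training points instead of following the trend of f.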

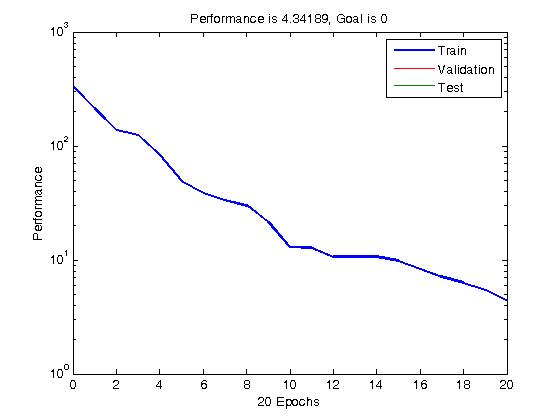

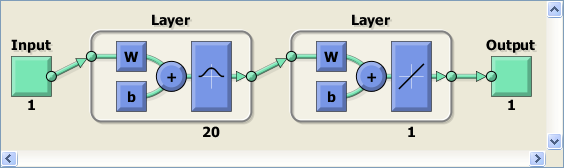

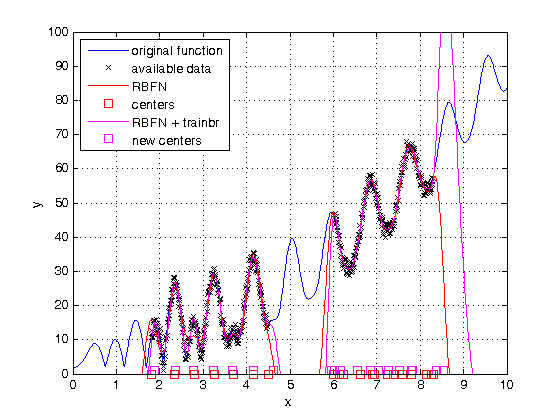

RBFN trained by Bayesian regularization

```matlab
% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%--------- RBFN ------------------
% choose a spread constant
spread = .2;
% choose max number of neurons
K = 20;
% performance goal (MSE)
goal = 0;
% number of neurons to add between displays
Ki = 20;
% create a neural network
net = newrb(Xtrain,Ytrain,goal,spread,K,Ki);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'r')

% show RBFN centers
c = net.iw{1};
plot(c,zeros(size(c)),'rs')
legend('original function','available data','RBFN','centers','location','northwest')

%--------- trainbr ---------------
% retrain the RBFN using Bayesian regularization backpropagation
net.trainFcn = 'trainbr';
net.trainParam.epochs = 100;

% perform Levenberg-Marquardt training with Bayesian regularization
net = train(net,Xtrain,Ytrain);
%---------------------------------

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'m')

% show RBFN centers after retraining
c = net.iw{1};
plot(c,ones(size(c)),'ms')
legend('original function','available data','RBFN','centers','RBFN + trainbr','new centers','location','northwest')
```

```
NEWRB, neurons = 0, MSE = 334.852
NEWRB, neurons = 20, MSE = 4.34189
```
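For the trainbr step, the trainbr documentation attributes the method to MacKay's Bayesian framework with the Gauss-Newton approximation of Foresee and Hagan. In that formulation the objective adds a weight penalty to the squared error, and the hyperparameters α and β are re-estimated during training. In the summary below, n is the number of error terms, N the number of weights and biases, and J the Jacobian of the errors with respect to the weights; the toolbox may scale these terms differently internally, so read it as the idea behind the method rather than its exact code path.

$$
F(\mathbf{w}) = \beta E_D + \alpha E_W, \qquad
E_D = \sum_{i=1}^{n} (t_i - y_i)^2, \qquad
E_W = \sum_{j=1}^{N} w_j^2
$$

$$
\mathbf{H} \approx 2\beta\,\mathbf{J}^{\top}\mathbf{J} + 2\alpha\,\mathbf{I}_N, \qquad
\gamma = N - 2\alpha\,\operatorname{tr}\!\left(\mathbf{H}^{-1}\right), \qquad
\alpha = \frac{\gamma}{2E_W}, \qquad
\beta = \frac{n - \gamma}{2E_D}
$$

Here γ is the effective number of parameters. The RBF centers are first-layer weights, so they enter E_W and are moved by the Levenberg-Marquardt steps as well; the section plots them a second time ("new centers") to show where they ended up.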

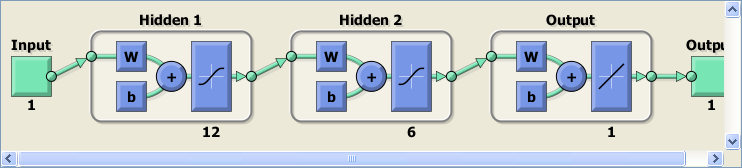

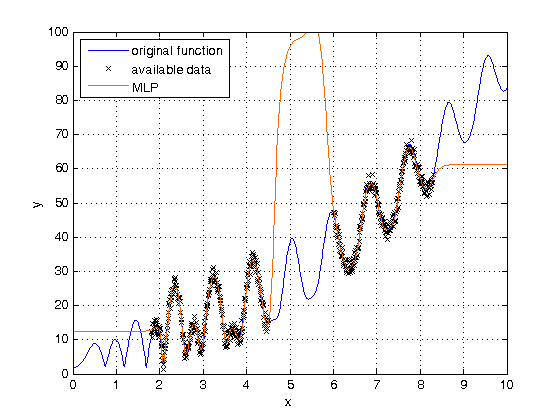

MLP

```matlab
% generate data
[X,Xtrain,Ytrain,fig] = data_generator();

%---------------------------------
% create a neural network with two hidden layers (12 and 6 neurons)
net = feedforwardnet([12 6]);

% data division: use all samples for training
% (no validation set, hence no early stopping)
net.divideParam.trainRatio = 1.0;   % training set [fraction]
net.divideParam.valRatio   = 0.0;   % validation set [fraction]
net.divideParam.testRatio  = 0.0;   % test set [fraction]

% train a neural network
net.trainParam.epochs = 200;
net = train(net,Xtrain,Ytrain);
%---------------------------------

% view net
view(net)

% simulate a network over complete input range
Y = net(X);

% plot network response
figure(fig)
plot(X,Y,'color',[1 .4 0])
legend('original function','available data','MLP','location','northwest')
```
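feedforwardnet([12 6]) builds a 1-12-6-1 network: two hidden layers with the default tansig transfer function and a linear (purelin) output. The base-MATLAB sketch below counts its trainable parameters and runs one forward pass with random, untrained weights to make the layer structure explicit. It ignores the input/output preprocessing the toolbox adds, assumes inputs already scaled to [-1, 1], and uses illustrative names (sizes, W, b, a, y).

```matlab
% Layer sizes for feedforwardnet([12 6]) on a 1-D regression problem
sizes = [1 12 6 1];
nParams = sum(sizes(2:end) .* (sizes(1:end-1) + 1));   % weights + biases per layer
fprintf('trainable parameters: %d\n', nParams);

% tansig(n) = 2./(1+exp(-2*n)) - 1 is mathematically tanh(n)
n = linspace(-3, 3, 7);
tansigErr = max(abs(tanh(n) - (2./(1 + exp(-2*n)) - 1)));

% Forward pass with random (untrained) weights
W = cell(1, 3);  b = cell(1, 3);
for L = 1:3
    W{L} = randn(sizes(L+1), sizes(L));
    b{L} = randn(sizes(L+1), 1);
end
a = linspace(-1, 1, 5);                % five scaled input samples
for L = 1:2
    a = tanh(W{L}*a + b{L});           % hidden layers (tansig)
end
y = W{3}*a + b{3};                     % linear output neuron (purelin)
```

The count is 1*12+12 + 12*6+6 + 6*1+1 = 109 parameters, fitted to 500 noisy training points.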

Data generator

type data_generator

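```matlab
%% Data generator function
function [X,Xtrain,Ytrain,fig] = data_generator()
% data generator

% input grid: 1000 points from 0.01 to 10
X = 0.01:.01:10;
% target function; asind returns complex values for X/2 > 1,
% so abs() takes the magnitude to keep f real
f = abs(besselj(2,X*7).*asind(X/2) + (X.^1.95)) + 2;
fig = figure;
plot(X,f,'b-')
hold on
grid on

% available data points: uniform noise in [-2.5, 2.5) added to f
Ytrain = f + 5*(rand(1,length(f))-.5);
% keep only two segments of the input range for training
Xtrain = X([181:450 601:830]);
Ytrain = Ytrain([181:450 601:830]);
plot(Xtrain,Ytrain,'kx')
xlabel('x')
ylabel('y')
ylim([0 100])
legend('original function','available data','location','northwest')
```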
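The index vector [181:450 601:830] decides which parts of the curve the models see. The short base-MATLAB check below (names unseen and Xunseen are illustrative) confirms the split: 500 of the 1000 grid points are used for training, in the segments x in [1.81, 4.50] and [6.01, 8.30], and the noise term is uniform on [-2.5, 2.5).

```matlab
% Same grid and index ranges as data_generator
X = 0.01:.01:10;
idx = [181:450 601:830];
Xtrain = X(idx);

% Inputs that never appear in the training set
unseen = setdiff(1:numel(X), idx);
Xunseen = X(unseen);

fprintf('grid points: %d, training points: %d, unseen: %d\n', ...
        numel(X), numel(Xtrain), numel(Xunseen));
fprintf('training segments: [%.2f, %.2f] and [%.2f, %.2f]\n', ...
        X(181), X(450), X(601), X(830));

% Noise model used for the targets: 5*(rand-0.5) is uniform on [-2.5, 2.5)
noise = 5*(rand(1, 1e5) - .5);
fprintf('noise range in this draw: [%.3f, %.3f]\n', min(noise), max(noise));
```

The unseen inputs form three regions: [0.01, 1.80] and [8.31, 10.00] test extrapolation, while [4.51, 6.00] tests interpolation between the two training segments. data_generator does not seed the random number generator, so every call draws new noise; calling rng(0) beforehand (not part of the original) would make runs repeatable.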